Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Upgrading to Future

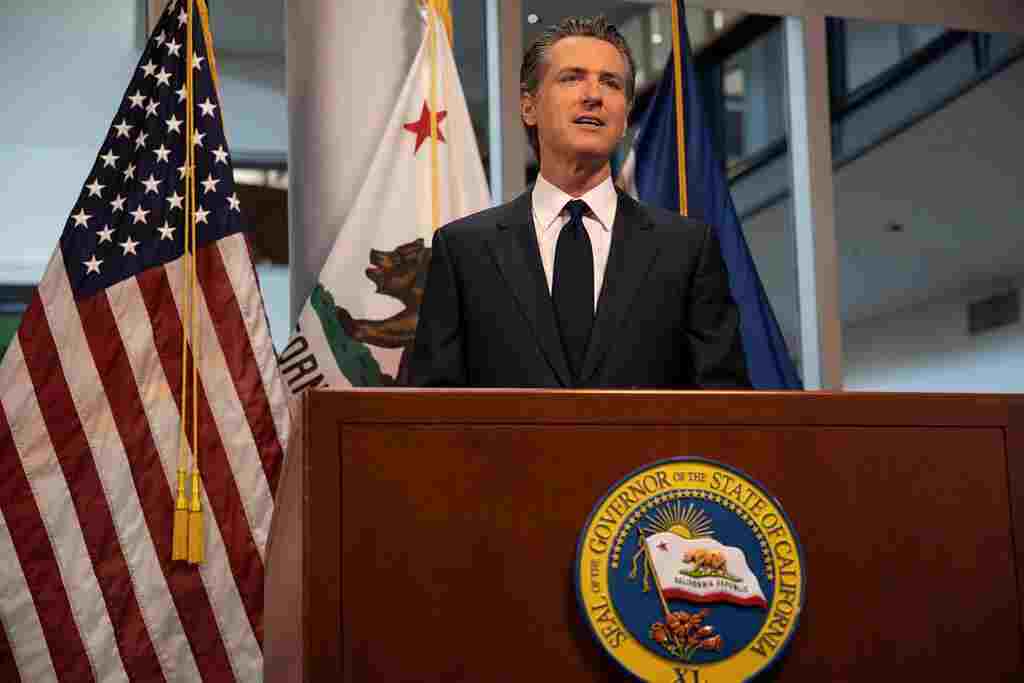

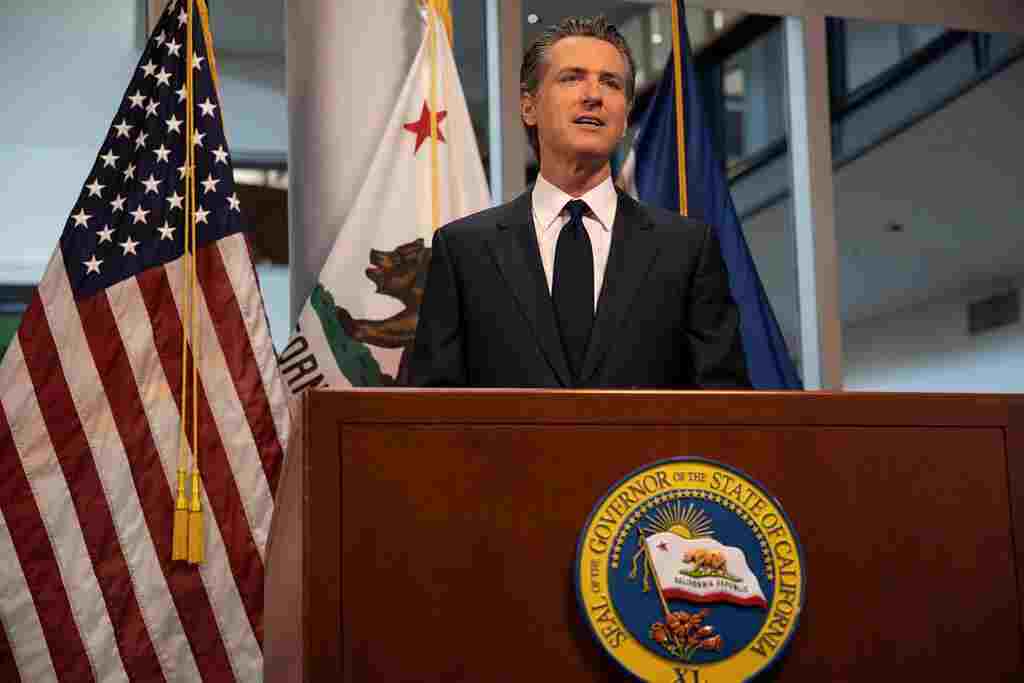

As artificial intelligence (AI) continues to evolve at breakneck speed, California’s newly released AI policy report is making international headlines. Commissioned by Governor Gavin Newsom and published in June 2025, the report is a comprehensive roadmap for AI safety and accountability—and it’s already being considered a model for future AI regulation around the world.

Global interest is surging (over 4,000 monthly searches and climbing), and the report offers more than vague guidelines. It delivers actionable AI governance strategies, highlights imminent threats, and calls for real-time accountability in AI development, particularly in high-stakes sectors like bioengineering and cybersecurity.

This 53-page report, shaped by a state-appointed working group co-led by world-renowned AI researcher Dr. Fei‑Fei Li, lays out a stark warning: if left unchecked, advanced AI technologies could cause “irreversible societal harms.”

Here are the key takeaways, explained in depth:

One of the most alarming parts of the report addresses the use of AI in biological engineering. Tools like large language models (LLMs) are increasingly capable of generating detailed instructions for creating pathogenic viruses or chemical weapons. The report flags this as a biosecurity crisis in the making unless strict safeguards are introduced.

🔎 Why it matters: These AI models are becoming “superhuman” in knowledge application but are being deployed with almost no oversight in scientific communities.

While AI’s intersection with nuclear capabilities has been largely theoretical until now, the report acknowledges growing concern. Advanced models might, in the wrong hands, offer insights into nuclear technologies, military strategy, or the creation of autonomous weapons.

📍 Recommendation: Development of “red lines” for prohibited research applications of AI, along with federal-level oversight mechanisms.

The report proposes independent third-party audits of major AI systems before and after deployment. This includes validating whether AI models claimed to be “safe” are in fact aligned with human values and stay within defined control limits.

📌 Example: A company building a general-purpose AI model must undergo rigorous, documented testing before scaling.

Many employees at AI companies fear retaliation for raising safety concerns. The report strongly advocates for whistleblower protection laws so that these employees are protected, heard, and empowered to act in the public’s interest.

💡 What this enables: Greater transparency within big tech companies developing frontier AI models.

The state is also calling for a centralized AI incident reporting system, where AI-related failures, misuses, or near-miss events must be reported to regulators and made partially public.

🏛️ Policy Goal: Prevent small-scale failures from becoming systemic threats, much as incident reporting does in aviation and financial regulation.

California is not just home to tech giants like OpenAI, Google, and Meta—it’s also a pioneer in emerging technology governance. By taking the lead on AI regulatory policy, the state may influence U.S. federal legislation and international frameworks on artificial intelligence governance.

🧭 Looking Ahead: The report encourages collaboration between government, academia, and private industry to implement these standards without stifling innovation.

California’s 2025 AI policy report is more than a warning—it’s a strategic blueprint to prevent AI-driven disasters. From protecting public safety to establishing a regulatory framework for the most powerful technologies ever created, this report marks a critical shift in how we govern artificial intelligence.

With searches for “AI safety news,” “AI regulation 2025,” and “California AI policy” all trending upward, now is the time for developers, policymakers, and the general public to pay close attention.